model_ramp_baseline

Ramp Baseline Model for Building Footprint Segmentation

The Replicable AI for Microplanning (Ramp) deep learning model is a semantic segmentation one which detects buildings from satellite imagery and delineates the footprints in low-and-middle-income countries (LMICs) using satellite imagery and enables in-country users to build their own deep learning models for their regions of interest. The architecture and approach were inspired by the Eff-UNet model outlined in this CVPR 2020 Paper.

MLHub model id: model_ramp_baseline_v1. Browse on Radiant MLHub.

Training Data

- Ghana Source

- Ghana Labels

- India Source

- India Labels

- Malawi Source

- Malawi Labels

- Myanmar Source

- Myanmar Labels

- Oman Source

- Oman Labels

- Sierra Leone Source

- Sierra Leone Labels

- South Sudan Source

- South Sudan Labels

- St Vincent Source

- St Vincent Labels

Related MLHub Dataset

Ramp Building Footprint Datasets

Citation

DevGlobal (2022) “Ramp Baseline Model for Building Footprint Segmentation”, Version 1.0, Radiant MLHub. [Date Accessed] Radiant MLHub. https://doi.org/10.34911/rdnt.1xe81y

License

CC BY-NC 4.0

Creator

Contact

info@dev.global

Applicable Spatial Extent

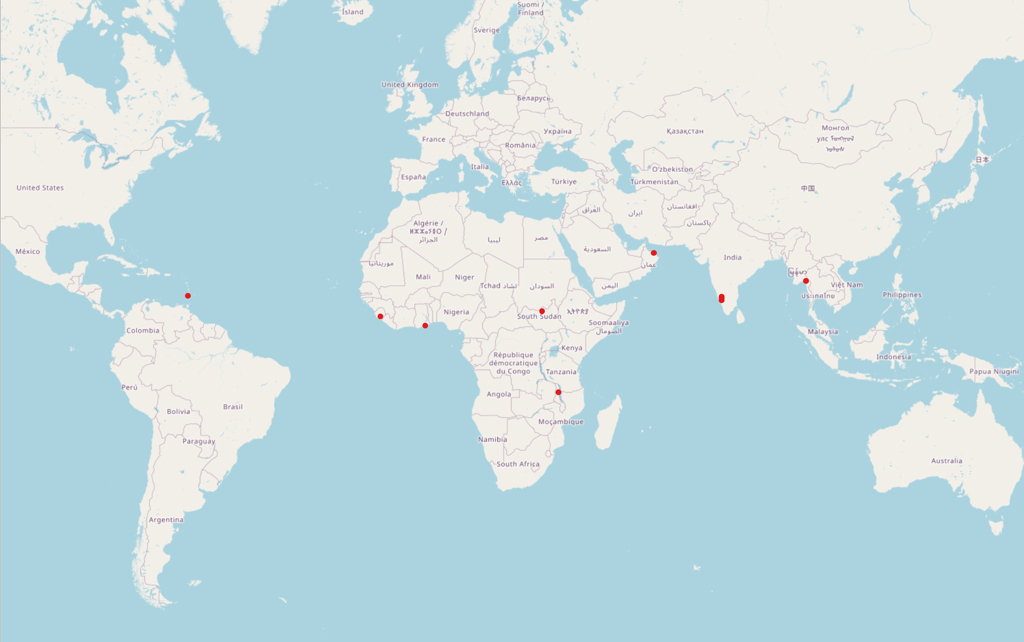

Download spatial_extent.geojson

© OpenStreetMap contributors

© OpenStreetMap contributors

Applicable Temporal Extent

| Start | End |

|---|---|

| 2007-10-01 | present |

Learning Approach

Supervised

Prediction Type

Segmentation

Model Architecture

Eff-UNet

Training Operating System

Linux

Training Processor Type

GPU

Model Inferencing

Review the GitHub repository README to get started running this model for new inferencing.

Methodology

This baseline building footprint detection model is trained to facilitate mapping building footprints in regions that are poorly mapped and using high resolution satellite imagery. The model was developed as part of the Replicable AI for Microplanning (Ramp) project. You can read a full documentation of the Ramp project, including the model training on the Ramp website. The model card is also accessible here.

Training

The model is designed to work with satellite imagery of 50 cm or higher spatial resolution. The training data for this model covers multiple regions across several Low and Middle Income Countries (LMIC) including Ghana, India, Malawi, Myanmar, Oman, Sierra Leone, South Sudan and St Vincent.

Two augmentation functions were applied to the training data: 1) Random Rotation, and 2) Random Change of Brightness, Contrast and Saturation (aka ColorJitter). Training uses a batch size of 16 with an Adam optimizer at a Learning Rate (LR) of 3E-04 and an early stopping function.

Model

This model is developed using the Eff-UNet model architecture outlined in this CVPR 2020 Paper. For a detailed architecture of the model, refer to Figures 3-5 in the paper.

Structure of Output Data

The model generates a multi-mask prediction including the following classes: background, buildings, boundary,close_contact. Output masks will have the same basename as the input chips, with the suffix pred.tif. The suffix pred.tif is used so that predicted masks will not be confused with truth masks.

These predictions are then post-processed to a binary mask for ‘building’ and

‘background’, and from there the polygons are delineated. Polygons smaller than

2 m2 are removed. The final prediction is one GeoJSON file for each

chip input to the model, with the suffix pred.geojson.